Amazon.com announced a new artificial intelligence chip for its cloud computing service on Nov 28, as its race with Microsoft to dominate the artificial intelligence market heats up.

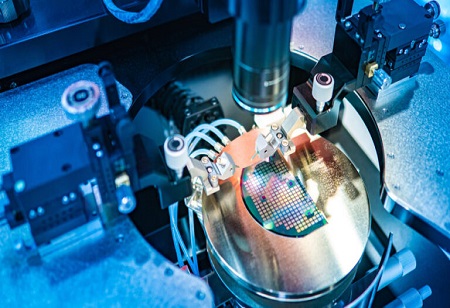

Amazon Web Services (AWS) Chief Executive Adam Selipsky announced Trainium2, the second generation of its chip designed for training AI systems, at a conference in Las Vegas. According to Selipsky, the new version is four times faster than its predecessor and twice as energy efficient.

The AWS move comes just weeks after Microsoft unveiled Maia, its own AI chip. The Trainium2 chip will also face competition from Alphabet's Google, which has been providing its Tensor Processing Unit (TPU) to cloud computing customers since 2018.

According to Selipsky, AWS will begin offering the new training chips next year. The proliferation of custom chips coincides with a scramble for computing power to develop technologies such as large language models, which serve as the foundation for services similar to ChatGPT.

Cloud computing companies are offering their chips to supplement Nvidia, the market leader in AI chips, whose products have been in short supply for the past year. AWS also announced on Tuesday that it will begin offering Nvidia's latest chips on its cloud service.

Selipsky also announced Graviton4 on Nov 28, the cloud firm's fourth-generation custom central processor chip, which he said is 30 per cent faster than its predecessor. The news comes weeks after Microsoft announced its own custom chip, called Cobalt, designed to compete with Amazon's Graviton series.

Both AWS and Microsoft are using technology from Arm Ltd in their chips, part of an ongoing trend away from chips made by Intel and Advanced Micro Devices in cloud computing. Oracle is using chips from startup Ampere Computing for its cloud service.
